Thursday, December 4, 2008

Business Data Catalog.

Gaaaaa!!

Lately Sharepoint keeps disappointing me. Here is another one.

We cannot make the BDC available to anonymous users: http://support.microsoft.com/kb/948729

Since I am using MOSS for an Internet-facing site, where the majority of pages are supposed to be accessible anonymously, this is a big limitation.

Also, I just cannot find an exhaustive reference that lists and explains all the possible values a Property of an element in the application definition XML can take.

I expected the BDC Definition Editor in the SDK to provide IntelliSense, but it does not. It just pops up a blank text box where you have to type a valid value…

Friday, November 14, 2008

Customize list form

This is really still just a memo to myself.

To redirect after submitting, add ?source=URL to the form's URL.
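
Here is a minimal sketch of building such a URL. It is written in Python rather than anything Sharepoint-specific, and the form and target URLs are hypothetical. The redirect target should be URL-encoded, and it should be appended with "&" rather than "?" if the form URL already has a query string.

```python
from urllib.parse import parse_qs, urlencode, urlsplit


def form_url_with_redirect(form_url: str, redirect_to: str) -> str:
    """Append ?source=<encoded URL>, or &source=... if a query string already exists."""
    separator = "&" if urlsplit(form_url).query else "?"
    return f"{form_url}{separator}{urlencode({'source': redirect_to})}"


def redirect_target(form_url: str):
    """Read the source parameter back, decoded; None if it is absent."""
    values = parse_qs(urlsplit(form_url).query).get("source")
    return values[0] if values else None


if __name__ == "__main__":
    print(form_url_with_redirect("http://intranet/Lists/Requests/NewForm.aspx",
                                 "http://intranet/thanks.aspx"))
```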

The official way (http://office.microsoft.com/en-us/sharepointdesigner/HA101191111033.aspx) of creating a custom list form uses the DataFormWebPart.

It has a limitation: attachments do not work, so they have to be disabled.

There seems to be an unsupported workaround: http://cid-6d5649bcab6a7f93.spaces.live.com/blog/cns!6D5649BCAB6A7F93!130.entry

If the goal is just to apply the corporate master page, we could simply replace the master page of the default NewForm.aspx with whichever master page we want.

Attachments then work.

BTW, NewForm.aspx uses the ListFormWebPart, which we cannot customize, or only very little. For instance, it seems we cannot hide fields that we do not need users to fill in.

For input validation, see: http://rdacollab.blogspot.com/2007/07/custom-sharepoint-edit-forms-with.html

Labels: Attachment, Form customization, List, Sharepoint

Friday, November 7, 2008

My first experience with Subversion

On the Subversion server, I did the following:

- Created a folder for my repository, and

- Ran TortoiseSVN’s “Create repository here …” on the folder.

This should be the equivalent of doing DOS> svnadmin create (path to the folder).

And on my PC,

- Created a folder structure in a temporary location, organized the way I want to organize my projects, as below.

Category1\
    Project1-1\
        trunk
        branches
        tags
    Project1-2\
        trunk
        branches
        tags
Category2\
    Project2-1\
        trunk
        branches
        tags
etc.
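
If you have many projects, a small script can create that skeleton so you do not have to click through the folders. Here is a sketch in Python that assumes the hypothetical category and project names from the example above:

```python
import tempfile
from pathlib import Path

# Hypothetical names, mirroring the example layout above.
LAYOUT = {
    "Category1": ["Project1-1", "Project1-2"],
    "Category2": ["Project2-1"],
}
STANDARD_DIRS = ("trunk", "branches", "tags")


def create_layout(root: Path, layout: dict) -> list:
    """Create <category>/<project>/{trunk,branches,tags} under root; return the relative paths."""
    created = []
    for category, projects in layout.items():
        for project in projects:
            for sub in STANDARD_DIRS:
                path = root / category / project / sub
                path.mkdir(parents=True, exist_ok=True)
                created.append(path.relative_to(root).as_posix())
    return created


if __name__ == "__main__":
    # mkdtemp keeps the folder, so it can be imported afterwards.
    root = Path(tempfile.mkdtemp(prefix="svn-layout-"))
    for relative in create_layout(root, LAYOUT):
        print(root / relative)
```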

- Then I imported those categories, specifying the repository’s URL, i.e. http://(svn server)/svn/(repository)/.

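I wanted to organize the projects into categories within one repository, but it seems the categories were ignored when I imported.

Only the projects were created in the repository, in a flat structure, with no categories… (I suspect this is because svn import imports the contents of the given folder, not the folder itself.)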

Then, finally, I imported my Visual Studio solutions and projects as follows, again on my PC.

For each one:

- Import the VS solution, this time specifying the URL of the project’s trunk folder, i.e. http://(svn server)/svn/(repository)/(project)/trunk.

# my categories were completely ignored…
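
For reference, the per-project import above corresponds to the `svn import PATH URL` command line. I used TortoiseSVN's Import dialog instead. The sketch below only builds the commands, and the repository URL and local paths are hypothetical. On a machine with the svn client installed, each list could be passed to `subprocess.run(cmd, check=True)`.

```python
from typing import Dict, List


def import_commands(repo_url: str, solutions: Dict[str, str]) -> List[List[str]]:
    """One `svn import` per VS solution, targeting <repository>/<project>/trunk.

    solutions maps a project name to the local folder of its VS solution (hypothetical paths).
    """
    base = repo_url.rstrip("/")
    return [
        ["svn", "import", local_dir, f"{base}/{project}/trunk", "-m", f"Initial import of {project}"]
        for project, local_dir in solutions.items()
    ]


if __name__ == "__main__":
    for cmd in import_commands("http://svn.example/svn/repo/", {"Project1-1": r"C:\work\Project1-1"}):
        print(" ".join(cmd))
```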

Here is how to make changes to those projects and save them back into SVN.

- First, I need to check out the VS project from SVN into an empty folder named after the project.

# I cannot make changes directly in the folder from which I did the initial import, because importing does not turn it into a working copy.

- Then, make whatever modifications I need.

- Next, I can check whether anybody else has changed the project in the meantime, with “Check for modifications” or “Update”.

- Finally, I commit the project.
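
Here is the same cycle with the svn command-line client instead of TortoiseSVN. Again, this is a sketch that only builds the commands, and the URL, folder name and message are hypothetical. `svn status --show-updates` is roughly what “Check for modifications” shows when it also checks the repository.

```python
from typing import List


def work_cycle_commands(project_url: str, working_dir: str, message: str) -> List[List[str]]:
    """The checkout / modify / check / commit cycle above, as svn command lines."""
    return [
        # 1. Check out into an (empty) folder named after the project.
        ["svn", "checkout", project_url, working_dir],
        # 2. After editing, see what changed locally and in the repository.
        ["svn", "status", "--show-updates", working_dir],
        # 3. Bring in anybody else's changes before committing.
        ["svn", "update", working_dir],
        # 4. Commit.
        ["svn", "commit", working_dir, "-m", message],
    ]
```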

Tuesday, October 7, 2008

Variations stopped

I suddenly realized that Variations had stopped copying sites and pages from the source to the targets. There were no recent entries in the log…

I looked at the timer job status and definitions, and found nothing for this particular web application.

I had stopped it once intentionally, by unchecking the “Automatic Creation” option, and believed I had restarted it by checking the option again.

Then I found this blog entry: http://www.objectsharp.com/cs/blogs/max/archive/2008/02/25/missing-timer-job-definitions-after-sharepoint-move.aspx

Although what triggered it was different, the situation was the same: the jobs had disappeared.

I created a “cheating” publishing site collection in the web application in question. The jobs came back, and Variations started working again.

Wednesday, October 1, 2008

FlexListViewer

http://blogs.infosupport.com/porint/archive/2006/08/15/9865.aspx

Have you ever wanted to show a list on a page that resides in a site different from the one that holds the list?

I believe lists and views are among the big selling points of Sharepoint. I like them very much. On the other hand, I do not like the Content Query Web Part; it is out of the question.

Our site is multilingual, and we use the Variations technique, though there are many aspects of it I am not happy with. This limitation, I believe, is one of its biggest shortcomings.

If you tell your users that they have to manage a separate list for each language, they will laugh at you.

FlexListViewer is my saviour. It allows a view to be displayed in a site other than the one the list belongs to.

Better still, the source code is available. There was one small point that did not quite fit our needs. We have the usual staging -> Internet-facing setup: we prepare pages on the staging site, and Content Deployment pushes them to the Internet-facing site. With the out-of-the-box FlexListViewer, the view URL needs to be a full URL starting with http://hostname. But in our case the host name changes in the course of deployment, so we do not really want to specify any host.

Thanks to the developer, who made the source available, I modified it so that if the specified URL starts with “/”, it assumes the host it is running on.
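
The change boils down to a few lines. I do not reproduce the actual FlexListViewer C# code here. This is a Python sketch of the same rule with hypothetical URLs: a server-relative view URL gets the scheme and host of the page it is rendered on, and any other URL is used unchanged.

```python
from urllib.parse import urlsplit


def resolve_view_url(view_url: str, current_page_url: str) -> str:
    """Return an absolute view URL, borrowing the host of the current page when needed."""
    # Server-relative ("/sites/...") but not protocol-relative ("//host/...").
    if view_url.startswith("/") and not view_url.startswith("//"):
        page = urlsplit(current_page_url)
        return f"{page.scheme}://{page.netloc}{view_url}"
    # Full URLs (http://hostname/...) are used unchanged, as in the original web part.
    return view_url
```

The same page setting then resolves to the staging host on staging and to the public host after Content Deployment.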

Lock down the Internet facing Sharepoint site

There is a command by that name, but this post is not about it; it did not seem to be what I wanted.

I want to “lock down” the web site so that nobody, including me, can modify it directly. We use Content Deployment, which pushes what we prepare (permission settings as well as content) from the staging site to the Internet-facing site, so modifications should happen only on the staging site.

I said we copy the permissions. Our web site is very decentralized: every part has its own group of people managing it, and that management includes “targeting audiences”, in other words restricting access.

The solution I found is the “Policy for Web Application”. It lets you set “Deny Write” for everybody.

This is good: now even the site collection administrators cannot modify it.

However, I then found that even the account used for Content Deployment could not write to it…

The solution I then found was to extend the web application and define an Internet zone for the actual public service, with Deny Write for everybody, while keeping the Default zone writable.
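
Here is a toy model in Python that makes the zone idea concrete. It is not the Sharepoint object model, just the rule as I understand it. The same content is reached through two zones (URLs). A Deny Write policy on the Internet zone overrides any write permission a user has in the site. The Default zone, which Content Deployment is assumed to write through, has no such policy. The zone URLs are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Zone:
    name: str
    url: str
    deny_write_for_all: bool  # models a "Deny Write" policy for everybody on this zone


# Hypothetical URLs: one web application, extended into two zones.
ZONES = {
    "Default": Zone("Default", "http://deploy-target:8080", deny_write_for_all=False),
    "Internet": Zone("Internet", "http://www.example.com", deny_write_for_all=True),
}


def can_write(zone_name: str, has_site_write_permission: bool) -> bool:
    """A zone-level deny wins over whatever permission the user has in the site."""
    zone = ZONES[zone_name]
    return has_site_write_permission and not zone.deny_write_for_all
```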

Friday, September 5, 2008

Need to switch off the local firewall to allow passive-mode FTP?

With IIS 7, it is possible to specify the data port range for passive FTP.

This should be good news for some firewall administrators.

# I do not know if it was already possible with IIS 6. I have never tried to run a serious FTP service on a Windows box.

However, I have the impression that we cannot open a range of ports with the Windows local firewall.

Ridiculously, you can only specify a single port number…

OK, then what about adding a program to the exceptions, namely the daemon process serving FTP?

You know what? With Windows Server 2008, it appears that many services run under just one executable, SVCHOST.EXE.

So, for example,

C:\>tasklist /SVC
Image Name      PID   Services
============================================
…
svchost.exe     2880  ftpsvc
…

is the one for FTP, I think.

But when I try to add it to the exceptions, the system complains. OK, I understand: that would be almost the same as switching the firewall off…

So, after all this, my conclusion for the moment is that we have to switch off the local firewall to allow passive-mode FTP (the default for many FTP clients, I think).

Follow-up on October 1, 2008:

I found a command to “Activate firewall application filter for FTP (aka Stateful FTP) that will dynamically open ports for data connections”:

http://blogs.iis.net/jaroslad/archive/2007/09/29/windows-firewall-setup-for-microsoft-ftp-publishing-service-for-iis-7-0.aspx
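
Why is a single port number not enough? In passive mode, the client opens the data connection to a port that the server announces in its 227 reply (RFC 959), and the server picks that port from the configured range. Here is a Python sketch that decodes such a reply. The address and the 5000-5100 range are hypothetical.

```python
import re

# h1,h2,h3,h4 = IPv4 address; p1,p2 = port as two bytes (RFC 959).
PASV_REPLY = re.compile(r"\((\d+),(\d+),(\d+),(\d+),(\d+),(\d+)\)")


def parse_pasv(reply: str) -> tuple:
    """Return (host, port) from a '227 Entering Passive Mode (h1,h2,h3,h4,p1,p2)' reply."""
    match = PASV_REPLY.search(reply)
    if match is None:
        raise ValueError(f"not a PASV reply: {reply!r}")
    numbers = [int(group) for group in match.groups()]
    host = ".".join(str(n) for n in numbers[:4])
    port = numbers[4] * 256 + numbers[5]  # the data port the client will connect to
    return host, port


def in_range(port: int, low: int, high: int) -> bool:
    """True if the announced port lies in the configured passive range."""
    return low <= port <= high


if __name__ == "__main__":
    host, port = parse_pasv("227 Entering Passive Mode (192,168,1,10,19,137)")
    print(host, port, in_range(port, 5000, 5100))  # 192.168.1.10 5001 True
```

The next transfer may get any other port in the range, so the firewall has to allow all of them. Stateful FTP solves this by watching the control connection instead.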

Hide Root web from Breadcrumb navigation

I do not think I am the only one who suffered from this.

Suppose you use the Variations technique to build a multilingual site, and you want breadcrumb navigation on it.

Then, what you get is: root web > Home > site1 …

But actually you do not want the “root web” AT ALL.

With Variations, the root web is just a redirection to one of the variation roots, depending on the user's language setting.

If my PC's language is set to French, I get: root web > Accueil > site1 … # “Accueil” means “Home”. It is our top page for French speakers.

So to me, the “root web” appearing there is completely stupid.

I thought it was everybody's problem, but I could not find anybody who had solved it, so I had to find a solution myself. Here it is.

I extended the built-in PortalSiteMapProvider, overriding its GetParentNode() method so that it returns null for the variation root nodes: Home, Accueil, etc.
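
The provider itself is C#, but the idea fits in a few lines. Here is a Python model of it, not the real PortalSiteMapProvider API. The breadcrumb walks up through the parent nodes. Because the overridden parent lookup returns nothing for a variation root, the walk stops there and “root web” never shows up. Matching variation roots by title is an assumption made for brevity; matching by URL would probably be more robust.

```python
from typing import List, Optional


class Node:
    """A minimal stand-in for a site map node: a title and a parent."""

    def __init__(self, title: str, parent: Optional["Node"] = None):
        self.title = title
        self.parent = parent


# Hypothetical: the titles of the variation root pages.
VARIATION_ROOTS = {"Home", "Accueil"}


def get_parent_node(node: Node) -> Optional[Node]:
    """Like the overridden GetParentNode(): a variation root reports no parent."""
    if node.title in VARIATION_ROOTS:
        return None
    return node.parent


def breadcrumb(node: Node) -> str:
    """Walk up through get_parent_node() and join the titles, root first."""
    trail: List[str] = []
    current: Optional[Node] = node
    while current is not None:
        trail.append(current.title)
        current = get_parent_node(current)
    return " > ".join(reversed(trail))


if __name__ == "__main__":
    root = Node("root web")
    site1 = Node("site1", Node("Accueil", root))
    print(breadcrumb(site1))  # Accueil > site1
```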

Labels: Breadcrumb navigation, CMS, MOSS, Sharepoint, Variations

Wednesday, July 9, 2008

User security information cannot be properly imported without setting UserInfoDateTime option to ImportAll.

I set up Content Deployment between my staging and production publishing sites, and got this warning.

For the Content Deployment path, I set Security Information to All. I want to deploy security information (such as ACLs, roles, and membership); this is part of our requirements.

I also unchecked Deploy user names, following http://technet.microsoft.com/en-us/library/cc263468.aspx.

It reads “… to protect the identities of your authors, disable the Deploy user names setting when you configure the content deployment path.”

It then turned out that the above-mentioned error (or rather warning) comes from this.

I think I was lucky to find http://msdn.microsoft.com/en-us/library/aa981161.aspx.

The title does not sound relevant, but in the code sample you see:

// Retain author name during import; change if needed
importSettings.UserInfoDateTime = SPImportUserInfoDateTimeOption.ImportAll;

Why we need to set UserInfoDateTime to ImportAll in order to “Retain author name during import”… do not ask me…

So I checked Deploy user names again and, voilà, the warning was gone.

Monday, June 30, 2008

Make a change to an application that uses the Data Access Layer technique

I think I like this technique in general. I have to admit the learning curve was steep, but once you get used to it, I think you will come to like it. This is especially true if you are someone like me: a guy who used to have ASP pages doing everything (no “three tiers”…) but who, on the other hand, likes OO (object-oriented) programming.

As always with a RAD tool like this, things are less complicated when you create a brand-new app. But today I needed to add some new fields to a DetailsView, and to the database table behind it.

Of course, you first add the column to the database table.

Then, in the TableAdapter representing the table, you should first adapt the main SELECT query. Do not add the column to the DataTable yourself; VS does that for you.

When you save the change, VS asks whether you want the update command adapted too. This can be useful if you do nothing special in the UPDATE statement. But if you do, ATTENTION: with this automatic adaptation, all your manual edits to the UPDATE statement will be gone. By the way, when you say YES, it adapts the insert command too.

I had the following difficulty.

As I said, I added some new columns to an existing table. One of them was a Boolean field, a bit column in SQL.

I added a CheckBox to the DetailsView for it, and got an error saying it was unable to convert DBNull to Boolean.

OK, I understand. For all existing rows it is NULL, because I created the column to accept NULL... wait a minute… I could have set a default value…

Anyway, I made it work by setting it to FALSE in the DAL class. First I get the data into the DataTable by calling a Get method of the TableAdapter, then I set the field to FALSE wherever the original value is NULL.
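
Here is a sketch of the same fix. It uses Python and an in-memory SQLite database as stand-ins for the C# DAL and SQL Server, and the table and column names are hypothetical. It shows why the error appears (adding a nullable column leaves every existing row NULL) and the workaround of replacing NULL with False after reading the rows.

```python
import sqlite3


def load_flags(conn: sqlite3.Connection, default: bool = False) -> list:
    """Read (id, is_featured) rows, replacing NULL with a default, as done in the DAL class."""
    rows = conn.execute("SELECT id, is_featured FROM items ORDER BY id").fetchall()
    return [(row_id, default if flag is None else bool(flag)) for row_id, flag in rows]


def demo() -> list:
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE items (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany("INSERT INTO items (name) VALUES (?)", [("first",), ("second",)])
    # A nullable column added later is NULL for every existing row: the source of the DBNull error.
    conn.execute("ALTER TABLE items ADD COLUMN is_featured INTEGER")
    conn.execute("INSERT INTO items (name, is_featured) VALUES ('third', 1)")
    return load_flags(conn)


if __name__ == "__main__":
    print(demo())  # [(1, False), (2, False), (3, True)]
```

As noted above, giving the column a default when adding it (for example NOT NULL DEFAULT 0) would avoid the NULLs in the first place.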

Thursday, June 19, 2008

PointFire

Still on the question of how to build a multilingual site.

My boss asked me to evaluate a package called PointFire. Although it turned out not to be what we are looking for, it may interest others who have different requirements from ours. But it is not free, and I myself find it a little expensive. Please ask the vendor directly about the price.

It does on-the-fly translation. It is an HTTP module, so the translation covers all output, regardless of where it is produced: master page, page layout, and content.

According to its user guide, it has a built-in dictionary for all the phrases Sharepoint uses out of the box, like “My Site” and “Site Actions”, so Sharepoint's own navigation, configuration pages, etc. are translated.

What do you think happens if you have a sequence of words like “Site Actions” in the middle of your content? Nothing, no translation, unless you tag just those two words, i.e. <span>Site Actions</span>.

You “register” the words and phrases you want the module to translate in a list called Multilingual Translations, which is created when the module is activated. There I registered the original English phrase “Hello, my name is” together with its French translation “Bonjour, je m’appelle”. The key point is the same: a phrase has to be tagged to be translated. I did not see this written anywhere in the user guide, though.
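
Here is a toy model in Python of the behaviour I observed. It is not how PointFire is implemented. It only illustrates that a phrase gets translated when it is tagged on its own and exactly matches a registered entry. The dictionary entries are hypothetical.

```python
import re

# Hypothetical entries, standing in for the "Multilingual Translations" list.
TRANSLATIONS_FR = {
    "Site Actions": "Actions du site",
    "Hello, my name is": "Bonjour, je m'appelle",
}

# Non-greedy, so each <span>...</span> is handled separately.
TAGGED = re.compile(r"<span>(.*?)</span>")


def translate_tagged(html: str, dictionary: dict) -> str:
    """Translate text only when it is wrapped in its own <span> and matches an entry exactly."""

    def replace(match):
        text = match.group(1)
        return f"<span>{dictionary.get(text, text)}</span>"

    return TAGGED.sub(replace, html)


if __name__ == "__main__":
    page = "Use Site Actions, or <span>Site Actions</span>. <span>Hello, my name is</span> Bob."
    print(translate_tagged(page, TRANSLATIONS_FR))
```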

There you go, my brief report on PointFire. I hope it is of some help to you…

Wednesday, June 18, 2008

Re: Sharepoint Variations: Not what I am looking for?

I posted this question to the MSDN forum, and below is the answer.

“Actually when the page is published it creates a new minor version in all sub variations. It's not overwriting any translated content, because it is a different version of that page.

It's then up to the owner of the content in that target variation to merge any changes into translated content. That's not something you can do by machine, so it's handled by versioning.”

Yes! You are absolutely right. On the forum, you really do sometimes find what you are looking for.

So if the situation is really what I described (just one typo fixed in English), all you have to do on the target sites is restore the previous version.

We should not be forced to do this, though… There should be a way to correct an English typo without affecting the other languages… wait a minute… there may be a way…

I will have a look and report what I found here.

Tuesday, June 3, 2008

Sharepoint Variations: Not what I am looking for?

I think I am not going to use it… at least for the moment… I am very disappointed…

Earlier, I quoted someone saying:

“Changes you make to the source site will automatically appear in each target site.”

Hmm… yes, maybe… but in other words, it wipes out whatever existed previously in the target sites…

That is OK when the page is first created. But suppose you then find a typo (one spelling mistake among a million words), correct it, and republish.

The translator gets an email, opens the page in one of the target sites and finds that… all the translations he or she did before, the million words, are gone!!!

Tuesday, May 27, 2008

Reverse proxy from Sharepoint site

I have been using ISAPI Rewrite 3.0 (http://www.helicontech.com/isapi_rewrite/) for URL rewriting on IIS.

I think this is a great tool, especially for those who use Apache and its rewrite module. The syntax is the same, so you can copy the URL-rewriting part of your httpd.conf over to IIS and it works.

It does proxying too. Unfortunately, though, I found that this does not work with Sharepoint.

This is because Sharepoint uses “wildcard application maps”. Below is an extract from the help:

"This table lists ISAPI applications that are executed before a Web file is processed, regardless of the file name extension. These ISAPI applications are called wildcard script maps. Using wildcard script maps is similar to using ISAPI filters, ..."

I removed the wildcard map to see what would happen. Bingo: my reverse proxy started working. However, Sharepoint then no longer behaved as before, so I had to put it back.

ISAPI_Rewrite.dll works with Sharepoint because it is an ISAPI filter, so it gets the requests first. ISAPI_RewriteProxy.dll does not, because it is implemented as an ISAPI extension rather than a filter.

Requests to the .rwhlp URL are taken by aspnet_isapi.dll, which is registered as the wildcard map, and it returns 404 because the file does not exist…

It is really sad. If the proxy were also implemented as an ISAPI filter, it would work well with Sharepoint too, which I believe is rapidly increasing its share of the market.

Friday, May 23, 2008

Re: Do not see the provisioned application aspx files in “Site Content and Structure”

The trick was the Type attribute of the <File> element that provisions the aspx.

Previously I had something like:

<Module>
  <File Url="PageTemplate.aspx" Name="Default.aspx" Type="Ghostable"></File>
</Module>

With this, the aspx does not appear in the browser interface. I needed to change it to Type="GhostableInLibrary".

So what are the possible values, and what is each one for?

http://msdn.microsoft.com/en-us/library/ms459213.aspx explains the attribute:

“Optional Text. Specifies that the file be cached in memory on the front-end Web server. Possible values include Ghostable and GhostableInLibrary. Both values specify that the file be cached, but GhostableInLibrary specifies that the file be cached as part of a list whose base type is Document Library.

When changes are made, for example, to the home page through the UI, only the differences from the original page definition are stored in the database, while default.aspx is cached in memory along with the schema files. The HTML page that is displayed in the browser is constructed through the combined definition resulting from the original definition cached in memory and from changes stored in the database.”

That means you have three choices: none (you can omit the attribute because it is optional), Ghostable, or GhostableInLibrary.

As for what each one is for… let me stop here for now. I have not found a document explaining it thoroughly, nor have I had time to experiment with it myself.
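
If you generate element manifests, a small check can make sure the Type value is one of the documented ones. Here is a Python sketch that builds the <Module>/<File> fragment from above and accepts only the documented values (or no Type at all). Real manifests may carry further attributes, which are left out here as in the excerpt.

```python
import xml.etree.ElementTree as ET
from typing import Optional

ALLOWED_TYPES = {"Ghostable", "GhostableInLibrary"}


def module_xml(url: str, name: str, file_type: Optional[str] = "GhostableInLibrary") -> str:
    """Serialize <Module><File Url=.. Name=.. [Type=..]/></Module>; file_type=None omits Type."""
    attrs = {"Url": url, "Name": name}
    if file_type is not None:
        if file_type not in ALLOWED_TYPES:
            raise ValueError(f"unexpected Type: {file_type!r}")
        attrs["Type"] = file_type
    module = ET.Element("Module")
    ET.SubElement(module, "File", attrs)
    return ET.tostring(module, encoding="unicode")


if __name__ == "__main__":
    print(module_xml("PageTemplate.aspx", "Default.aspx"))
```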

Monday, May 19, 2008

Do not see the provisioned application aspx files in “Site Content and Structure”

Second post of the “ASP.NET application integration into a Sharepoint publishing portal” series.

The application page approach works fine. I think I have got what I wanted: I have now integrated one of my ASP.NET apps into the Sharepoint portal.

Then I moved on to the others. There is one intranet application that I would like to integrate into our internal Sharepoint portal.

For it, I had implemented its own menu system, controlled by a sitemap XML file.

The menu is security-trimmed according to the access control defined in web.config. That is, a user sees only the menu items he or she has access to.

Once the app is integrated, all this should already be provided by the portal; you do not have to implement your own.

Easy!! I only have to locate the provisioned aspx file in Sharepoint's browser interface and set the permissions…

??? I do not see it in the browser interface, on the page titled “Site Content and Structure”...

Monday, May 12, 2008

Feature for an ASP.NET application

This is the first post of the “ASP.NET application integration into a Sharepoint publishing portal” series.

Unlike the way I deployed my CreatePage.aspx-like page (see http://murmurofawebmaster.blogspot.com/2008/05/how-to-have-something-like.html), this time I prepared a Feature.

The main difference (to me, at least for the moment) is the masterpage.

With this ASP.NET app, I want to use the masterpage I prepared for my internet-facing site. Therefore the app's aspx page is provisioned into the site through the Feature. If it just sits in the LAYOUTS folder without being provisioned into a site, it does not get the masterpage defined for the site.

I did the following:

1. Created an assembly implementing the code-behind class as well as other helper classes. It goes into the Bin folder of the portal.

2. Made the aspx inherit from a class in that assembly.

3. Created a Feature that provisions (instantiates) the aspx into the site when the Feature is activated.

ASP.NET application integration into a Sharepoint publishing portal

This is not going to be a one-time post explaining everything from A to Z; it is really my murmur. I will find solutions (I hope I can) as I run into problems.

There seems to be a consensus that, for ASP.NET application integration into Sharepoint, web parts are the way to go.

Below is an extract from a blog post.

1. Convert each Asp.Net page into one or more Asp.Net User Controls.

2. Deploy the controls to the ControlTemplates directory.

3. For each page in the Asp.Net app, create a custom page layout containing its respective User Control(s).

4. Deploy the page layouts to the target site, and create a single page instance for each page layout.

But, frankly, I do not understand why we should go for the web parts solution.

We can run an arbitrary aspx page (with a code-behind class) in a Sharepoint site. This seems simpler to me.

Starting with the next post, I will show the steps I took.

Friday, May 9, 2008

Characters with accents are not displayed correctly

Our site is in multiple languages. We used to use the iso-8859-1 encoding but needed to move to utf-8 to add languages that use multi-byte characters, such as Chinese. Japanese, my mother tongue, was not among them, though…

Today I want to share an experience we had during the transition: the problem and its solution.

One day a developer came to me saying that in his ASP.NET app, accented characters were not displayed correctly.

I looked into it and found that, by mistake, he had the following in the masterpage he used. It had come in when he copied & pasted from an old page.

```html
<meta http-equiv="Content-Type" content="text/html; charset=iso-8859-1" />
```

But at the same time, he had this in the web.config because his site is also in languages with multi-byte characters.

```xml
<globalization fileEncoding="utf-8" requestEncoding="utf-8" responseEncoding="utf-8"/>
```

We corrected the mistake:

```html
<meta http-equiv="Content-Type" content="text/html; charset=utf-8" />
```

But the accents still did not come out right. After spending some time looking for hints on the net, we found that the file itself had been saved in iso-8859-1.
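You can check this without opening an editor. Here is a sketch in Node.js (my choice of tool, not what we used at the time). `TextDecoder` with `fatal: true` throws on bytes that are not valid UTF-8. An accented character saved as iso-8859-1 is a single byte, such as 0xE9 for "é", and that byte is not valid UTF-8 on its own. The helper name `isValidUtf8` is hypothetical. The check is only a heuristic: a pure-ASCII file passes either way.

```javascript
// Returns true if the bytes decode cleanly as UTF-8.
function isValidUtf8(bytes) {
  try {
    new TextDecoder("utf-8", { fatal: true }).decode(bytes);
    return true;
  } catch {
    return false; // TypeError on the first invalid sequence
  }
}

const savedAsLatin1 = Buffer.from("café", "latin1"); // 63 61 66 e9
const savedAsUtf8 = Buffer.from("café", "utf8"); // 63 61 66 c3 a9

console.log(isValidUtf8(savedAsLatin1)); // false
console.log(isValidUtf8(savedAsUtf8)); // true
// What a UTF-8 reader makes of the iso-8859-1 bytes: "caf" followed by the
// U+FFFD replacement character, i.e. the accent is lost.
console.log(savedAsLatin1.toString("utf8"));
```

To check a real file, pass it `require("fs").readFileSync(...)` with the path of the aspx in question.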

Visual Studio had saved it in iso-8859-1 in the first place because the masterpage that the aspx file refers to contained this:

```html
<meta http-equiv="Content-Type" content="text/html; charset=iso-8859-1" />
```

And Visual Studio does not change that. It does not re-save the file in the new character encoding, even after the mistake is corrected.

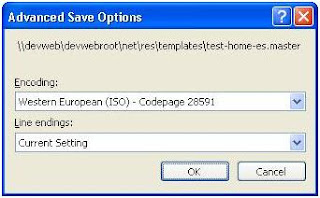

We have to explicitly re-save it in the correct encoding. Here is how.

You go to “Save as” in the File menu, click the small arrow next to the Save button and choose “Save with Encoding…”.

Then choose Unicode in the window that opens. # The fact that ISO is selected initially tells us that the file is currently saved in ISO.
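If there are many files to fix, the same re-save can be scripted. Here is a minimal sketch for Node.js. It assumes the files really are iso-8859-1. Node's "latin1" decoding maps each byte to the code point of the same value, so Windows-1252 extras such as € in the 0x80-0x9F range would need a different decoder. The optional BOM is my assumption of what the "with signature" UTF-8 choice adds. The function names are hypothetical.

```javascript
const fs = require("fs");

// UTF-8 byte order mark ("signature").
const UTF8_BOM = Buffer.from([0xef, 0xbb, 0xbf]);

// Re-encode iso-8859-1 bytes as UTF-8 bytes.
function latin1ToUtf8(latin1Bytes, { withBom = false } = {}) {
  const text = latin1Bytes.toString("latin1"); // byte -> same code point
  const body = Buffer.from(text, "utf8"); // code points -> UTF-8 bytes
  return withBom ? Buffer.concat([UTF8_BOM, body]) : body;
}

// Overwrite a file in place. Run it once only: a file that is already
// UTF-8 would be double-encoded if converted again.
function convertFileToUtf8(path, options) {
  fs.writeFileSync(path, latin1ToUtf8(fs.readFileSync(path), options));
}
```

For example, `convertFileToUtf8("MyPage.aspx", { withBom: true })` (hypothetical file name). Ideally, run it only after checking that the file is not already valid UTF-8.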

Friday, May 2, 2008

How to have something like _layouts/CreatePage.aspx of our own

Recently I learnt quite a few things thanks to a kind guy I met on the MSDN forums, and one of them is this: how to have something like _layouts/CreatePage.aspx of your own.

My motivation was the following.

I want to have some properties defined on sites, in very much the same sense that content types have custom columns, or fields.

I guess many people have the same need.

I thought an application page could do this, and that it would be very similar to CreatePage.aspx in the following sense:

- Invoked from the Site Actions menu, and

- Invoked under each site’s context and does what it is supposed to do for the site.

Therefore, the URL has to be http://host/(path to the site)/_layouts/theAppPage.aspx.

To do so, you write the manifest.xml as below.

```xml
<Solution>
  <TemplateFiles>
    <TemplateFile Location="LAYOUTS\theAppPage.aspx" />
  </TemplateFiles>
</Solution>
```

When deployed, this places the aspx file in the C:\Program Files\Common Files\Microsoft Shared\web server extensions\12\TEMPLATE\LAYOUTS folder, and it becomes available at a URL like the one above.

Cool!!
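Because the page lives in the shared LAYOUTS folder, the same file answers under every site. Only the path in front of /_layouts/ changes, and that path decides which site's context the page runs in. On the server side, the page can get the site it was called for through `SPContext.Current.Web`. Here is a tiny sketch of the URL pattern; `layoutsPageUrl` is a hypothetical helper.

```javascript
// Build the URL of an application page deployed to LAYOUTS, as seen from a
// particular site. sitePath may be "/", "/sites/news", "sites/news/", ...
function layoutsPageUrl(host, sitePath, pageName) {
  const trimmed = sitePath.replace(/^\/+|\/+$/g, ""); // drop outer slashes
  const site = trimmed ? `/${trimmed}` : ""; // "" for the root site
  return `http://${host}${site}/_layouts/${pageName}`;
}

console.log(layoutsPageUrl("host", "/sites/news/", "theAppPage.aspx"));
// http://host/sites/news/_layouts/theAppPage.aspx
console.log(layoutsPageUrl("host", "/", "theAppPage.aspx"));
// http://host/_layouts/theAppPage.aspx
```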

How to get error detail with SharePoint

http://www.keirgordon.com/2007/02/sharepoint-error-detail.html

Let me copy it here too, since the page above may one day become unavailable.

In addition to `<customErrors mode="Off" />`, which everybody (including me) could guess, you have to set `<SafeMode CallStack="true">` in web.config.
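If you switch these on and off often, the edit can be scripted. Switch them off again on a production server. Here is a rough sketch in Node.js. It rewrites the existing attribute values with regular expressions, so it assumes both elements are already in web.config with the attributes present. `enableErrorDetail` is a hypothetical name, and editing the file by hand works just as well.

```javascript
// Set customErrors mode="Off" and SafeMode CallStack="true" in a web.config
// string. Only existing attributes are changed; nothing is added.
function enableErrorDetail(webConfig) {
  return webConfig
    .replace(/(<customErrors\b[^>]*\bmode=")[^"]*(")/, "$1Off$2")
    .replace(/(<SafeMode\b[^>]*\bCallStack=")[^"]*(")/, "$1true$2");
}

const before =
  '<SafeMode MaxControls="200" CallStack="false" DirectFileDependencies="10">\n' +
  '<customErrors mode="On" />';
console.log(enableErrorDetail(before));
```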

Wednesday, April 30, 2008

“please wait while scripts are loaded…”

I really need to write this down… I spent (wasted) more than a full day just sorting out this small thing…

We are implementing our corporate page template as a MOSS masterpage.

Copy and paste the HTML code. Try it first with a blank page layout, without even the page content area. Fine. Looks good and easy.

Next, add the page content field to the center of the layout.

This time, after creating a content page based on the layout, we type some text into the page content area in the center.

Preview it from the Tools menu. The text I typed DISAPPEARS!! Try saving without previewing. Same thing: the text is gone… This is where my long debugging exercise started.

Everything works without our page template (the HTML code pasted into the masterpage).

With the template, I noticed that when I switch the page to edit mode, the browser's status bar reads “please wait while scripts are loaded…”

It looks like something is going wrong with the generated JavaScript code.

A Sharepoint-generated page contains tens of thousands of lines of JavaScript. You need a debugger like Firebug for this.

We need to either (1) get Firefox to authenticate against Sharepoint, or (2) find a good JavaScript debugger for IE. Luckily I found the latter.

Thank God! The debugger found the line causing the error. It was in a JS file called /_layouts/1033/HtmlEditor.js, at line 5772.

```javascript
var displayContentElement=document.getElementById(clientId+"_displayContent");
// …
var findForm=displayContentElement;
while (findForm.tagName!="FORM" && findForm.tagName!="WINDOW")
{
    findForm=findForm.parentElement;
}
findForm.attachEvent("onsubmit",new Function("RTE2_TransferContentsToTextArea('"+clientId+"');"));
```

At run time, `clientId+"_displayContent"` is the id of the `<div>` element for the page content text area. This code adds an event handler for the form submission, so that the typed text is saved. However, with our page template pasted in, climbing up through `findForm.parentElement` never reaches a FORM element: the code cannot find the `<form id="aspnetForm">` element to attach the event handler to. Why?

I had a hunch that it was the `<form>` element for the search box in our page template, though that did not really make sense to me: it is properly closed, so it should not interfere with anything else.

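But that was it. With it removed, my simple page with our corporate page template now works. We will think later about how to bring the search box back. The likely reason is that HTML does not allow nested forms. An ASP.NET page already wraps its whole body in one server form (aspnetForm), so the search box's `<form>` ends up nested inside it. Browsers typically ignore the nested start tag, so its `</form>` can close aspnetForm early and leave the editor outside any form.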
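Here is a sketch of what that loop does when the editor's `<div>` is no longer inside the form. It uses plain objects standing in for DOM elements, with only `tagName` and `parentElement`. `findEnclosingForm` and `el` are hypothetical helpers. Unlike the original, the sketch stops at the top of the tree and returns null, instead of reading `tagName` of null and throwing. In today's browsers, `element.closest("form")` does the same walk.

```javascript
// Climb parentElement links until a FORM is found; null if there is none.
function findEnclosingForm(element) {
  let node = element;
  while (node && node.tagName !== "FORM") {
    node = node.parentElement;
  }
  return node || null;
}

// Minimal stand-in for a DOM element.
function el(tagName, parent = null, id = "") {
  return { tagName, parentElement: parent, id };
}

// Expected structure: body > form#aspnetForm > div (the editor).
const pageBody = el("BODY", el("HTML"));
const aspnetForm = el("FORM", pageBody, "aspnetForm");
const editorOk = el("DIV", aspnetForm, "ctl00_displayContent");

// Structure after the form was closed early: the div hangs off body.
const editorBroken = el("DIV", pageBody, "ctl00_displayContent");

console.log(findEnclosingForm(editorOk).id); // aspnetForm
console.log(findEnclosingForm(editorBroken)); // null
```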

To me, the lesson here is that if you are unlucky enough to get trapped by a problem like this, it is really difficult to debug with Sharepoint MOSS.

Tuesday, April 29, 2008

Features and Solutions: why do we need them?

A Feature is a unit of customization that you can activate at a chosen scope of SharePoint: a site, a web application, a farm, etc. A Solution is the packaging framework for Features.

Actually, some built-in “features” of SharePoint are delivered to you as Features.

For instance, the built-in masterpages and layouts for the Publishing portal are found at C:\Program Files\Common Files\Microsoft Shared\web server extensions\12\TEMPLATE\FEATURES\PublishingLayouts\MasterPages\.

Likewise, if you create a Solution package containing your own masterpage as a Feature, you can deploy it (quite easily, indeed) to other instances of SharePoint.

What happens if you do NOT use Features and Solutions? What about your customizations, such as content types you created with the browser-based interface, layouts you designed with SharePoint Designer, etc.?

The answer (at least for the moment) is that customizations done that way, i.e. through the browser and SPD, go into the content database. You cannot extract them from there and deploy them selectively to other instances of SharePoint. For example, if you design your masterpage with SPD, it is stored in the database. You cannot make it available to any SharePoint instance other than the one you created it on, unless you copy and paste the code manually.

It is even worse for content types: you cannot copy and paste them at all.

Features are defined in XML, and there is a schema for it.

What is painful, however, is that you have to write all of this by hand. No tool is available yet to generate it from the content database.

Having said that, I do not know whether this is a (major) problem for people like us who maintain our own web sites, as opposed to consultants who develop solutions for clients.

We have a staging site and a production site, with Content Deployment job(s) between them. We have already confirmed that this “publishes” masterpages and layouts too. That covers everything necessary for content pages to appear correctly, except for assemblies and other file-based customizations.

So what is wrong with doing all customization on the staging site using the browser interface and SPD, and having it all appear nicely on the production site without worrying about deployment etc.?

I even wonder now whether we still need this old "staging site and production site" concept, structure, or whatever you call it.

SharePoint presents a page differently (approved version, draft, etc.) depending on who you are and where you come from. We might be able to go with just one site.

We may still need the (BROKEN, according to many) Content Deployment framework, though, to distribute content among the servers in a farm. I have not looked into this yet. I will share the experience once I have it.

Follow-up on July 7 2008:

No, you do not need to set up Content Deployment to sync content across a farm.

Actually, it is quite obvious. In a farm, the content lives in the web application's content database, and all the web servers in the farm connect to that same database. You do not need to synchronize anything.

Monday, April 28, 2008

Content Deployment “Timed Out”

When we switched to daylight saving time (DST: really an IT guy's nightmare…) at 2am on March 30th, 2008, content deployment stopped working. The result was always “Timed Out”. BTW, it took days just to figure out that it might be because of the time change…

I found this hotfix http://support.microsoft.com/kb/938663/.

SharePoint’s own timer service, or scheduler, is so smart that it does not notice the time change. Please… Thus a job scheduled to run at 8am does not run until 9. When it finally runs, it has already “timed out.”

Since the hotfix in question (http://support.microsoft.com/kb/938535/) is included in SP1 (http://support.microsoft.com/kb/942390), I decided to go for SP1 instead.

One final remark. The description of the hotfix reads as follows.

“The Windows SharePoint Timer service does not update its internal time when Microsoft Windows makes the transition from standard time to DST or from DST to standard time. Therefore, after you apply this hotfix, you must restart the Windows SharePoint Timer service after each transition from standard time to DST and after each transition from DST to standard time. If you do not restart the Windows SharePoint Timer service, timer jobs that you schedule may be delayed. Or, they may fail.”
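The one-hour delay is easy to reproduce on paper. This sketch assumes a scheduler that reads the UTC offset once at start-up and never refreshes it. The offsets are example values for Central European Time: +60 minutes before the switch and +120 after. The function names are hypothetical. In this model, restarting the service corresponds to refreshing the cached offset.

```javascript
const MS_PER_MINUTE = 60 * 1000;
const MS_PER_HOUR = 60 * MS_PER_MINUTE;

// UTC instant at which a job scheduled for `hour`:00 local time fires,
// computed with the offset the scheduler believes is in effect.
// dayUtcMs is midnight UTC of the calendar date in question.
function fireTimeUtc(dayUtcMs, hour, believedOffsetMinutes) {
  return dayUtcMs + hour * MS_PER_HOUR - believedOffsetMinutes * MS_PER_MINUTE;
}

// Hour on the wall clock at a UTC instant, given the real offset.
function wallClockHour(utcMs, realOffsetMinutes) {
  return new Date(utcMs + realOffsetMinutes * MS_PER_MINUTE).getUTCHours();
}

const day = Date.UTC(2008, 2, 31); // March 31, 2008 (months are 0-based)
const cachedOffset = 60; // CET, read before the March 30 switch
const actualOffset = 120; // CEST, in effect afterwards

console.log(wallClockHour(fireTimeUtc(day, 8, cachedOffset), actualOffset)); // 9
console.log(wallClockHour(fireTimeUtc(day, 8, actualOffset), actualOffset)); // 8
```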

Thursday, April 24, 2008

Web Statistics: NetIQ WebTrends vs Awstats

The organisation I work for uses NetIQ WebTrends.

We bought it some years back and have not upgraded it since, so I do not know its latest version. The one we use is the eBusiness edition, version 6.1.

The first thing I noticed was the long time it takes to process log data, especially after we enabled the geographical analysis: several minutes for just one day of logs.

Our site is rather busy. It had 1,952,538 hits on Monday, for instance, which means that many records in a log file to process. # Actually, it first has to unzip the file, which already takes some time.

So this may sound understandable; you may even think it is doing a good job and ask what the problem is.

The problem is that it has to process the log files again each time you create a new “profile” to analyze something new.

In a profile you define, among other things, the “filter”. A filter is typically the URL path you want statistics for.

For example, this blogger.com server hosts many sites, including mine, murmurofawebmaster.blogspot.com. To prepare access statistics for my site, you would have to “filter” the requests for it out of the gigantic blogger.com server logs.
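At its core, such a filter is just a test on each request line. Here is a minimal sketch. It assumes Apache/NCSA combined-format log lines (IIS W3C logs have a different layout) and a path-prefix filter. `countHitsUnder` is hypothetical. Each new filter means another pass over all the lines, which is why a new profile takes so long on a year of logs.

```javascript
// The quoted request line of a combined-format entry: "GET /path HTTP/1.1".
const REQUEST_LINE = /"[A-Z]+ (\S+) HTTP\/[\d.]+"/;

// Count requests whose path is `prefix` itself or lies below it.
function countHitsUnder(lines, prefix) {
  const base = prefix.replace(/\/+$/, ""); // "/news/" -> "/news", "/" -> ""
  let hits = 0;
  for (const line of lines) {
    const match = REQUEST_LINE.exec(line);
    if (!match) continue; // skip malformed lines
    const path = match[1].split("?")[0]; // ignore the query string
    if (path === base || path.startsWith(base + "/")) hits++;
  }
  return hits;
}

const sampleLog = [
  '10.0.0.1 - - [21/Apr/2008:10:00:00 +0200] "GET /news/a.html HTTP/1.1" 200 512 "-" "UA"',
  '10.0.0.2 - - [21/Apr/2008:10:00:01 +0200] "GET /news?page=2 HTTP/1.1" 200 512 "-" "UA"',
  '10.0.0.3 - - [21/Apr/2008:10:00:02 +0200] "GET /newsletter/ HTTP/1.1" 200 512 "-" "UA"',
];
console.log(countHitsUnder(sampleLog, "/news")); // 2 (/newsletter/ is not under /news)
```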

Imagine that it is near the end of the year and you need statistics for one particular URL over the whole year.

For me, that takes something like 3 days. People today will not understand that; they will think you are dumb.

So we looked at alternatives, namely Awstats. I think WebTrends and Awstats are the two best-known packages for web log analysis.

First, we listed what we like and dislike about WebTrends.

Good points/Advantage:

G1. Delegation of management

G2. Presents the geographical distribution of accesses

Points we dislike:

B(ad)1. A “profile” has to be created in order to see statistics of a subsite independently

B2. It takes time for a profile to be ready for viewing

A colleague even said that the way we use it is probably wrong, and there should be a way to make it ready instantly.

B3. The way the profiles are presented/organized. They are just added chaotically…

B4. Really a black box. An analysis fails without a trace of why it failed.

B5. When something is not documented, or we cannot find an explanation, we are now stuck, because we no longer have a valid license. # We decided not to keep the support contract.

Awstats: Perl-based freeware.

G1: There is no concept of delegated management. Your administrator needs to edit its text config file. -> Disadvantage

G2: Its documentation says it is possible, but I did not try it: the environment I used for this evaluation lacks not just one but several required Perl libraries. -> Let us say Equal

B1, B2 and B3 -> Equal, or WebTrends is slightly better to me.

I think processing log data is a time-consuming task with any package.

I fed just one day of logs to Awstats, and it took a while (10 minutes or more) to “digest” even that.

And to get statistics for a subsite, just as with WebTrends, you need a specific “profile”. You also have to process the log data against it and keep the result in a separate data store (a database of some sort).

The main difference I found between WebTrends and Awstats in how they handle a series of log files is the following.

With Awstats, it is your responsibility not to feed it the same data twice. This can be a bit tricky, especially when you want to (re-)analyze all past logs; you have to do some programming yourself. It is only simple when you feed logs as they are created.

WebTrends, on the other hand, remembers the full paths of the log files it has already processed. So you normally just specify a folder and tell it to process everything in it. That makes it simple to process, say, all of this year's logs today.
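The bookkeeping that WebTrends does for you can be approximated around Awstats with a small amount of code. This sketch assumes daily log files whose names sort chronologically (for example IIS-style exYYMMDD.log). It also assumes you persist the set of already-processed paths between runs yourself. The function names are hypothetical, and how each file is then passed to Awstats is left out.

```javascript
// Return the log files not yet fed to the analyser, oldest first.
function selectUnprocessed(logFiles, processed) {
  return logFiles.filter((file) => !processed.has(file)).sort();
}

// Record files as done; returns a new Set and leaves the old one untouched.
function markProcessed(files, processed) {
  const next = new Set(processed);
  for (const file of files) next.add(file);
  return next;
}

let done = new Set(["logs/ex080420.log"]);
const folder = ["logs/ex080422.log", "logs/ex080420.log", "logs/ex080421.log"];

const todo = selectUnprocessed(folder, done);
console.log(todo); // [ 'logs/ex080421.log', 'logs/ex080422.log' ]
// ...feed each file in `todo` to the analyser here, in order...
done = markProcessed(todo, done);
console.log(selectUnprocessed(folder, done)); // []
```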

B4 and B5 -> Equal

We cannot really tell, until we start using it seriously, whether Awstats is robust, whether good troubleshooting information is available, etc.

However, if there is a problem, then, as with other popular open source packages, I think we could find people on the net who already had it and overcame it.

With WebTrends, on the contrary, we cannot rely on the net. But if you have a support contract, the support guys are there to help you out of any problem.

In short, we did not see any significant gain from Awstats over WebTrends, so we decided to stay with WebTrends. We have been using it for some years already (though with some dissatisfaction), so we have a certain level of understanding, know-how, and experience with it.

We bought it some years back and have not upgraded it ever since, so I do not know its latest version. The version we use is its eBuisiness edition, version 6.1.

The first thing I noticed was the long time it requires to process log data, especially after we enabled the geographical analysis. Several minutes for just one day.

Our site is a rather busy site, had 1,952,538 hits on Monday for instance, which means you have such number of records in a log file to process. # Actually, it first has to unzip it. This takes some time already.

So it may sound understandable, you may even think it is doing a good job and ask what is the problem.

The problem is that it needs to process the log files, each time you create a new “profile”, to analyze a new thing.

With a profile, you define the “filter” among other things. A filter typically is a URL path, of which you want to have statistics.

For example, this blogger.com server hosts many sites including mine, murmurofawebmaster.blogspot.com. To prepare access statistics of my site, you have to “filter” accesses to this site from the whole gigantic blogger.com server log data.

Imagine that you are towards end of the year, and need to prepare statistics for one particular URL, of the whole year.

For me, it takes even like 3 days. Today, people will not understand that, they will think you are damn.

So we looked at possible alternatives, namely Awstats. I think WebTrends and Awstats are the most famous two in this domain of web log analysis.

First, we listed points that we like and dislike WebTrends.

Good points/Advantage:

G1. Delegation of management

G2. Present geographical distribution of access

Points we dislike:

B(ad)1. A “profile” has to be created in order to see statistics of a subsite independently

B2. Take time for a profile to be ready to be seen

A colleague even said that the way we use it is probably wrong, and there should be a way to make it ready instantly.

B3. The way the profiles are presented/organized. They are just being added chaotically…

B4. Really a black box. An analysis fails without a trace of why it failed.

B5. For something not documented, or that you can not find the explanation, now stuck, because of no valid license. # We decided not to keep its support contract.

Awstats. A perl based freeware.

G1: There is no concept of delegation of management. You administrator need to edit its text config file. -> Disadvantage

G2: Its documentation says possible. But I did not try. The environment I used for this evaluation misses not just one but several required perl libraries. -> Let us say Equal

B1, B2 and B3 -> Equal, or WebTrends is slightly better to me.

I think processing of log data is a time consuming task with all packages in general.

I fed just one day log to Awstats and it took some time (like 10min or so, even more) for it to “digest” even that.

And to have statistics for a subsite, just like with WebTrends, you have to have the specific “profile”, process the log data against the profile and have the result in a separate data store, a database of some sort.

The main difference I found between WebTrends and Awstats with respect to ways to handle series of log data is the following.

With Awstas, it is your responsibility to make sure not to feed it with the same data twice. I think this can be a bit tricky especially when you want to (re-)analyze all past logs. You yourself have to do some programming to achieve this. It is only simple when you feed logs as they are created.

On the other hand, WebTrends remember the full path of log files it has already processed. Therefore, you normally specify a folder and tell it to process everything there. Very simple too when processes all log of this year, today for instance.

B4 and B5 -> Equal

We cannot really tell, until we start using it seriously, whether Awstats is robust, whether good troubleshooting information is at hand, etc.

However, as with other popular open source packages, if we ran into a problem, I think we could find people on the net who had already had the same problem and overcome it.

With WebTrends, by contrast, we cannot rely on the net. But if you have a support contract, the support guys are there to help you out of whatever problem you have.

In short, we did not see any significant gain from Awstats that we do not already have with WebTrends. So we decided to stay with WebTrends. We have been using it for some years already (though not entirely satisfied), and thus we have some level of understanding, know-how, experience, etc. with it.

Google Analytics

How many visitors do I have? It looks like blogger.com does not tell me that.

So I decided to try Google Analytics. Luckily, blogger.com does allow us to insert the required JavaScript snippets into our blogs.

So far (as anticipated), the number of hits I have on this site is really, really small. I need to put more interesting stuff here to draw your attention.

Tuesday, April 22, 2008

Cynthia Says

This morning I went to a workshop on making web sites accessible to people with disabilities. The presenter was Cynthia of http://www.cynthiasays.com/, who seems to be a very famous person in this area.

I have worked on the topic a little already. My boss asked me to go through the W3C standard and write a summary. It is about things like no longer using <table> to lay out the page, and properly labeling every input field of your forms.
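As an illustration of the labeling rule, here is a minimal Python sketch that lists form fields with no label, using only the standard html.parser module. It counts a field as labeled if a <label for="..."> points at its id or if the field sits inside a <label>. The set of input types skipped and all function names are my own assumptions; it does not consider aria-label or title attributes, so it is a quick check, not a real accessibility validator.

```python
from html.parser import HTMLParser

# Input types that do not need a visible <label> (an assumption; adjust to your rules).
SKIP_TYPES = {"hidden", "submit", "button", "reset", "image"}
FIELD_TAGS = {"input", "select", "textarea"}


class LabelChecker(HTMLParser):
    """Collects form fields and the ids that <label for="..."> elements point to."""

    def __init__(self):
        super().__init__()
        self.fields = []            # (tag, id or None, wrapped inside a <label>?)
        self.label_targets = set()  # values of for="..." on <label> elements
        self._label_depth = 0       # > 0 while inside a <label> element

    def handle_starttag(self, tag, attrs):
        a = dict(attrs)
        if tag == "label":
            self._label_depth += 1
            if a.get("for"):
                self.label_targets.add(a["for"])
        elif tag in FIELD_TAGS:
            if tag == "input" and (a.get("type") or "text").lower() in SKIP_TYPES:
                return
            self.fields.append((tag, a.get("id"), self._label_depth > 0))

    def handle_endtag(self, tag):
        if tag == "label" and self._label_depth > 0:
            self._label_depth -= 1


def unlabeled_fields(html):
    """Return (tag, id) for every field with neither an explicit nor a wrapping label."""
    checker = LabelChecker()
    checker.feed(html)
    checker.close()
    return [(tag, fid) for tag, fid, wrapped in checker.fields
            if not wrapped and (fid is None or fid not in checker.label_targets)]
```

Fields without an id are reported with None, since no <label for> can point at them.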

So not many new things came out of today's session. Still, I picked up these interesting points from the Q&A session.

PDF is an image, not readable by screen readers.

Adobe has made some improvements recently, but it is still basically a snapshot image of a document. This is really new to me. I had thought it was more like PostScript than a snapshot.

As you may have heard, the Captcha technique is becoming an issue in this context. Our site uses it too.

Today, she presented a couple of possible solutions, including providing an audio file next to it. Sounds cool to me.

Friday, April 18, 2008

Massive SEO poisoning

The story started with this blog post. http://ddanchev.blogspot.com/2008/04/unicef-too-iframe-injected-and-seo.html

A guy in the organization I work for, who can be noisy at times, happens to be a subscriber to the feed and brought this to our attention.

I am the webmaster there, officially titled so. So I have to do, or at least say, something when things are brought up this way.

I read the post; it was hard to understand. Frankly, I still do not know if I got the full idea.

SEO (I did not know the abbreviation) apparently stands for Search Engine Optimization. In this context, in short, it refers to the fact that search engines give higher ranking to pages from “high profile sites”.

Then, IFRAME injection (I did not know that this was getting that popular either) basically means injecting malicious content by exploiting the well-known XSS (Cross-Site Scripting) vulnerability.
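To make the idea concrete, here is a minimal Python sketch, assuming purely for illustration a search page that echoes the visitor's query back into the result page. The function names and the malicious.example URL are made up. Escaping the query with the standard html.escape turns an injected <iframe> into harmless text; output encoding like this is one standard defense against XSS, not the whole story.

```python
import html


def render_results_unsafe(query):
    # Echoes the raw query back: an injected <iframe> becomes live markup in the page.
    return f"<p>Results for: {query}</p>"


def render_results_safe(query):
    # html.escape turns &, < and > into entities, and " and ' too (quote=True is the default).
    return f"<p>Results for: {html.escape(query)}</p>"


payload = '<iframe src="http://malicious.example/" width="0" height="0"></iframe>'
print(render_results_unsafe(payload))
print(render_results_safe(payload))
```

If a search engine indexes the unsafe version of such a URL, the iframe comes along with the site's good reputation; the escaped version only ever shows the text of the query.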

So I told the guy that XSS had been looked into for our site, so we are safe. In reality, you can never be completely safe. But sometimes you need to be diplomatic, bureaucratic…

The one thing I still do not really get is how those injected URLs then get indexed by Google.

According to some posts I found on the net, those malicious guys publish millions of pages tagged with keywords, containing links to those injected URLs. The Google robot comes along and is tricked into thinking that the injected URLs are mentioned in many places for those keywords. It then indexes them with a high ranking because they come from a “high profile site”.

Thursday, April 17, 2008

FTP: Active vs. Passive

I keep forgetting this, but occasions when I need to understand it keep coming back: for example, when I programmed a simple web-based FTP client (I expected to find a free AJAX control or something like that, but could not), or when I helped our network guys do some maintenance. So let me write a brief summary of things good to know/remember so that I do not have to google it yet again…

This is a really nice summary I found at http://www.cert.org/tech_tips/ftp_port_attacks.html.

“A client opens a connection to the FTP control port (port 21) of an FTP server. So that the server will be later able to send data back to the client machine, a second (data) connection must be opened between the server and the client.

To make this second connection, the client sends a PORT command to the server machine. This command includes parameters that tell the server which IP address to connect to and which port to open at that address - in most cases this is intended to be a high numbered port on the client machine.

The server then opens that connection, with the source of the connection being port 20 on the server and the destination being the port identified in the PORT command parameters.

The PORT command is usually used only in the "active mode" of FTP, which is the default. It is not usually used in passive (also known as PASV [2]) mode. Note that FTP servers usually implement both modes, and the client specifies which method to use [3].”
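To see what "parameters that tell the server which IP address to connect to and which port" look like on the wire, here is a small Python sketch that builds a PORT command. In RFC 959 the argument is six comma-separated numbers: the four bytes of the IPv4 address, then the port split into a high byte and a low byte (port = high × 256 + low). The function name is my own; only IPv4 is handled.

```python
def format_port_command(ip, port):
    """Build an FTP PORT command: four IPv4 address bytes, then the port as high and low bytes (RFC 959)."""
    octets = ip.split(".")
    if len(octets) != 4 or not all(o.isdigit() and int(o) <= 255 for o in octets):
        raise ValueError(f"not a dotted IPv4 address: {ip!r}")
    if not 0 < port < 65536:
        raise ValueError(f"port out of range: {port}")
    high, low = divmod(port, 256)  # port = high * 256 + low
    return "PORT " + ",".join([str(int(o)) for o in octets] + [str(high), str(low)])


print(format_port_command("192.168.0.10", 50000))  # PORT 192,168,0,10,195,80
```

This also shows why active mode troubles firewalls and NAT: the client's own (often private) address is written inside the command, and the server is expected to connect back to it.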

Then what is passive mode? I think this explanation, from http://slacksite.com/other/ftp.html#passive, is compact and quick to read but detailed enough.

“In order to resolve the issue of the server initiating the connection to the client a different method for FTP connections was developed. This was known as passive mode, or PASV, after the command used by the client to tell the server it is in passive mode.

In passive mode FTP the client initiates both connections to the server, solving the problem of firewalls filtering the incoming data port connection to the client from the server. When opening an FTP connection, the client opens two random unprivileged ports locally (N > 1023 and N+1). The first port contacts the server on port 21, but instead of then issuing a PORT command and allowing the server to connect back to its data port, the client will issue the PASV command. The result of this is that the server then opens a random unprivileged port (P > 1023) and sends the PORT P command back to the client. The client then initiates the connection from port N+1 to port P on the server to transfer data.”
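One detail worth adding: in RFC 959 the server actually answers PASV with a 227 reply that carries the address and port in the same six-number format as the PORT argument, e.g. "227 Entering Passive Mode (h1,h2,h3,h4,p1,p2)". Here is a small Python sketch that decodes such a reply. The function name is my own; the pattern does not require the parentheses because some servers omit them.

```python
import re

# Six comma-separated numbers; parentheses are not required since some servers omit them.
_PASV_NUMBERS = re.compile(r"(\d+),(\d+),(\d+),(\d+),(\d+),(\d+)")


def parse_pasv_reply(reply):
    """Return (host, port) from a '227 Entering Passive Mode (h1,h2,h3,h4,p1,p2)' reply."""
    if not reply.startswith("227"):
        raise ValueError(f"not a PASV reply: {reply!r}")
    match = _PASV_NUMBERS.search(reply)
    if match is None:
        raise ValueError(f"no address in PASV reply: {reply!r}")
    h1, h2, h3, h4, p1, p2 = (int(g) for g in match.groups())
    return f"{h1}.{h2}.{h3}.{h4}", p1 * 256 + p2


print(parse_pasv_reply("227 Entering Passive Mode (10,0,0,5,195,80)."))  # ('10.0.0.5', 50000)
```

In practice you rarely do this by hand: Python's ftplib uses passive mode by default, and FTP.set_pasv(False) switches it to active mode.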

Wednesday, April 16, 2008

Develop custom authentication module

Last week, I enjoyed developing a DNN (DotNetNuke) module specific to our own needs.

I do not know how best to describe it, but… DotNetNuke seems to be a rich framework on top of which we can develop our own customizations.

The facilities it exposes for our use include easy handling of module properties.

Each module has an end-user interface and a configuration interface that admins use to set it up.

You design the configuration interface, laying out textboxes, checkboxes, etc. for the properties of your module. Then, in the code-behind class, you override one method, which is called when the admin, after filling in those textboxes, checkboxes, etc., clicks the link to save the configuration change.

I mean, I do not have to worry about any of this. The framework is there.

All you have to do is take one of the existing modules as a sample, change the design of the config or end-user interface user control (or both), and code the event handler method(s).

In addition to that, the framework provides functions to save the value of a property to the database and read it back.
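DotNetNuke modules are written in .NET, so the sketch below is not DNN code and does not use DNN's API. It is only a Python illustration, with hypothetical names (SettingsStore, ModuleSettingsPage, update_settings, GreetingSettings), of the pattern described above: the framework owns the "save" click and the storage of property values, and the module author just overrides one method. An in-memory SQLite table stands in for the framework's database.

```python
import sqlite3


class SettingsStore:
    """Hypothetical stand-in for the framework's save/load-a-property functions."""

    def __init__(self, conn):
        self.conn = conn
        conn.execute("CREATE TABLE IF NOT EXISTS module_settings ("
                     "module_id INTEGER, name TEXT, value TEXT, "
                     "PRIMARY KEY (module_id, name))")

    def save(self, module_id, name, value):
        self.conn.execute("INSERT OR REPLACE INTO module_settings VALUES (?, ?, ?)",
                          (module_id, name, value))
        self.conn.commit()

    def load(self, module_id, name, default=None):
        row = self.conn.execute("SELECT value FROM module_settings "
                                "WHERE module_id = ? AND name = ?",
                                (module_id, name)).fetchone()
        return row[0] if row else default


class ModuleSettingsPage:
    """Hypothetical framework base class: it handles the click and calls the hook."""

    def __init__(self, module_id, store):
        self.module_id = module_id
        self.store = store

    def on_save_clicked(self, form):
        # The framework, not the module author, wires the save link to this method.
        self.update_settings(form)

    def update_settings(self, form):
        raise NotImplementedError("each module overrides this")


class GreetingSettings(ModuleSettingsPage):
    """What a module author writes: only the override."""

    def update_settings(self, form):
        self.store.save(self.module_id, "greeting", form["greeting_textbox"])
        self.store.save(self.module_id, "show_date",
                        "1" if form["show_date_checkbox"] else "0")
```

The point of the design is that everything except update_settings is shared by all modules, which is exactly why starting from an existing module as a sample works so well.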

I do not know if all this is documented somewhere in a publicly available document. I will let you know if I find it.
